4 Box-Cox

4.1 Box-Cox

You have likely heard a lot of talk about having normally distributed predictors. This isn’t that common of an assumption, and having a fairly non-skewed symmetric predictor is often enough. Linear Discriminant Analysis assumes Gaussian data, and that is about it (TODO add a reference here). Still, it is worthwhile to have more symmetric predictors, and this is where the Box-Cox transformation comes into play.

In Chapter 2 on logarithms, we saw how they could be used to change the distribution of a variable. One of the downsides is that if we want to get closer to normality, it doesn’t do well unless applied to a log-normally distributed variable. The Box-Cox transformation tries to find an optimal power transformation. This method was originally intended to be used on the outcome of a model.

It works by using maximum likelihood estimation to estimate a transformation parameter \(\lambda\) in the following equation that would optimize the normality of \(x^*\)

\[ x^* = \left\{ \begin{array}{ll} \dfrac{x^\lambda - 1}{\lambda \tilde{x}^{\lambda - 1}}, & \lambda \neq 0 \\ \tilde{x} \log x & \lambda = 0 \end{array} \right. \]

where \(\tilde{x}\) is the geometric mean of \(x\). It is worth noting again, that what we are optimizing over is the value of \(\lambda\). This is also a case of a trained preprocessing method when used on the predictors. We need to estimate the parameter \(\lambda\) on the training data set, then use the estimated value to apply the transformation to the training and test data set to avoid data leakage. Lastly, Box-Cox only works with positive numbers. Take a look at Chapter 5 about the Yeo-Johnson method that tries to accomplish the same thing, and it works on positive as well as negative numbers.

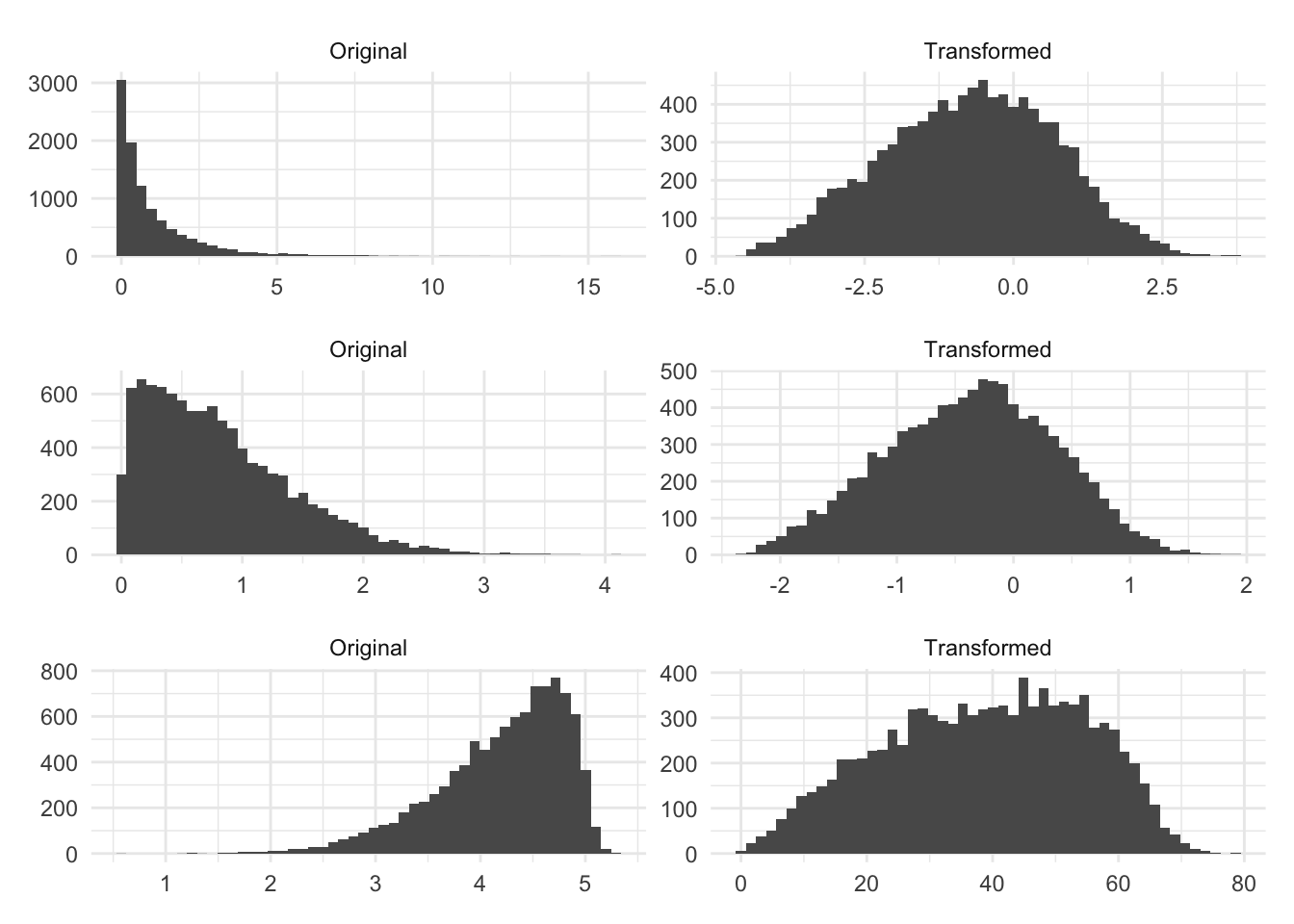

Let us see some examples of Box-Cox at work. Below is three different simulated distribution, before and after they have been transformed by Box-Cox.

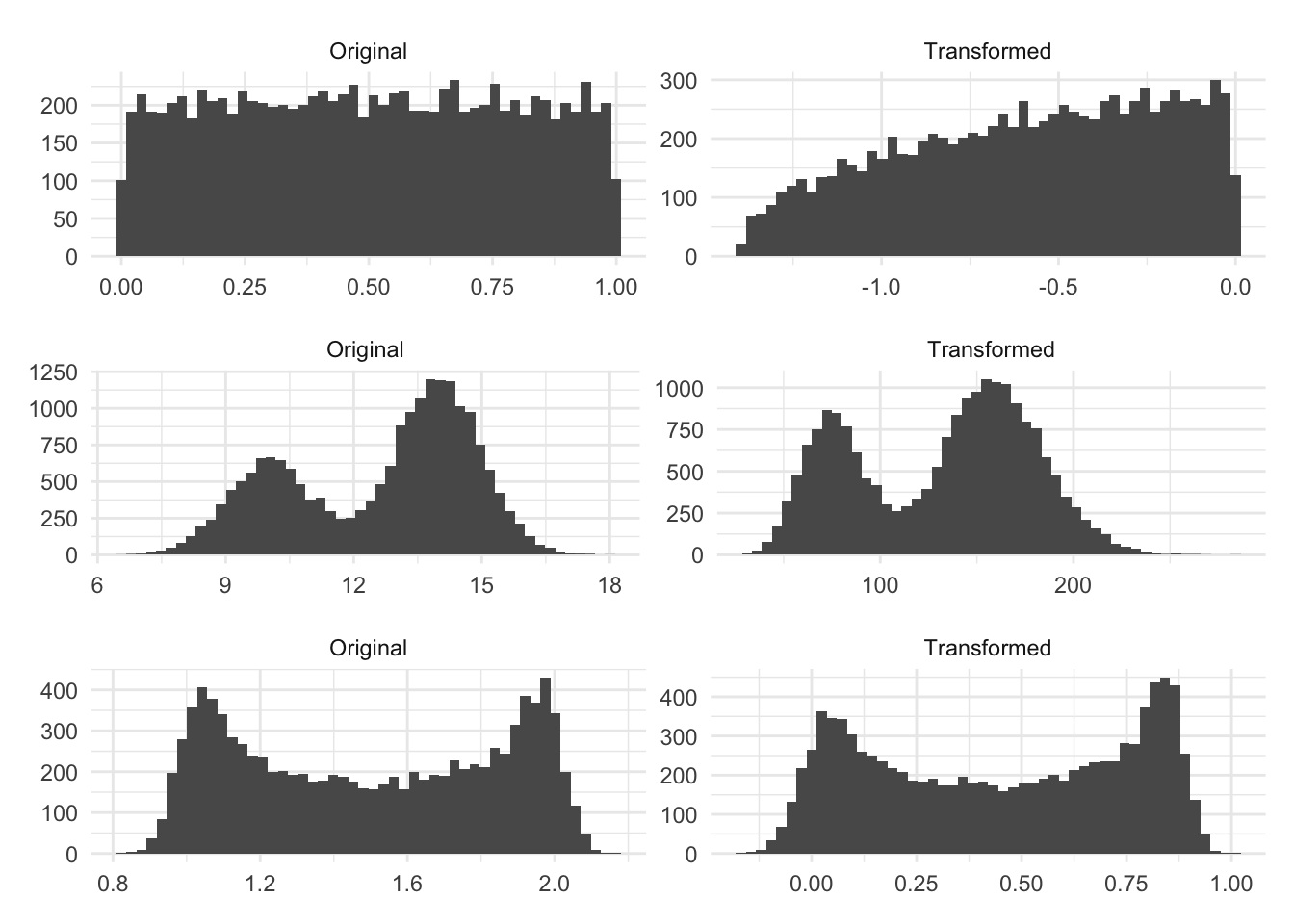

The original distributions have some left or right skewness. And the transformed columns look better, in the sense that they are less skewed and they are fairly symmetric around the center. Are they perfectly normal? No! but these transformations might be beneficial. The next set of distributions wasn’t so lucky.

The Box-Cox method isn’t magic and will only give you something more normally distributed if the distribution can be made more normally distributed by applying a power transformation.

The first distribution here is uniformly random. The resulting transformation ends up more skewed, even if only a little bit, than the original distribution because this method is not intended for this type of data. We are seeing similar results with the bi-modal distributions.

4.2 Pros and Cons

4.2.1 Pros

- More flexible than individually chosen power transformations such as logarithms and square roots

4.2.2 Cons

- Doesn’t work with negative values

- Isn’t a universal fix

4.3 R Examples

We will be using the ames data set for these examples.

library(recipes)

library(modeldata)

data("ames")

ames |>

select(Lot_Area, Wood_Deck_SF, Sale_Price)# A tibble: 2,930 × 3

Lot_Area Wood_Deck_SF Sale_Price

<int> <int> <int>

1 31770 210 215000

2 11622 140 105000

3 14267 393 172000

4 11160 0 244000

5 13830 212 189900

6 9978 360 195500

7 4920 0 213500

8 5005 0 191500

9 5389 237 236500

10 7500 140 189000

# ℹ 2,920 more rows{recipes} provides a step to perform Box-Cox transformations.

boxcox_rec <- recipe(Sale_Price ~ Lot_Area, data = ames) |>

step_BoxCox(Lot_Area) |>

prep()

boxcox_rec |>

bake(new_data = NULL)# A tibble: 2,930 × 2

Lot_Area Sale_Price

<dbl> <int>

1 21.8 215000

2 18.2 105000

3 18.9 172000

4 18.1 244000

5 18.8 189900

6 17.7 195500

7 15.5 213500

8 15.5 191500

9 15.8 236500

10 16.8 189000

# ℹ 2,920 more rowsWe can also pull out the value of the estimated \(\lambda\) by using the tidy() method on the recipe step.

boxcox_rec |>

tidy(1)# A tibble: 1 × 3

terms value id

<chr> <dbl> <chr>

1 Lot_Area 0.129 BoxCox_3gJXR4.4 Python Examples

We are using the ames data set for examples. {feature_engine} provided the BoxCoxTransformer() which is just what we need in this instance. We note that this transformer does not work on non-positive data.

from feazdata import ames

from sklearn.compose import ColumnTransformer

from feature_engine.transformation import BoxCoxTransformer

ct = ColumnTransformer(

[('boxcox', BoxCoxTransformer(), ['Lot_Area'])],

remainder="passthrough")

ct.fit(ames)ColumnTransformer(remainder='passthrough',

transformers=[('boxcox', BoxCoxTransformer(), ['Lot_Area'])])In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook. On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

ColumnTransformer(remainder='passthrough',

transformers=[('boxcox', BoxCoxTransformer(), ['Lot_Area'])])['Lot_Area']

BoxCoxTransformer()

['MS_SubClass', 'MS_Zoning', 'Lot_Frontage', 'Street', 'Alley', 'Lot_Shape', 'Land_Contour', 'Utilities', 'Lot_Config', 'Land_Slope', 'Neighborhood', 'Condition_1', 'Condition_2', 'Bldg_Type', 'House_Style', 'Overall_Cond', 'Year_Built', 'Year_Remod_Add', 'Roof_Style', 'Roof_Matl', 'Exterior_1st', 'Exterior_2nd', 'Mas_Vnr_Type', 'Mas_Vnr_Area', 'Exter_Cond', 'Foundation', 'Bsmt_Cond', 'Bsmt_Exposure', 'BsmtFin_Type_1', 'BsmtFin_SF_1', 'BsmtFin_Type_2', 'BsmtFin_SF_2', 'Bsmt_Unf_SF', 'Total_Bsmt_SF', 'Heating', 'Heating_QC', 'Central_Air', 'Electrical', 'First_Flr_SF', 'Second_Flr_SF', 'Gr_Liv_Area', 'Bsmt_Full_Bath', 'Bsmt_Half_Bath', 'Full_Bath', 'Half_Bath', 'Bedroom_AbvGr', 'Kitchen_AbvGr', 'TotRms_AbvGrd', 'Functional', 'Fireplaces', 'Garage_Type', 'Garage_Finish', 'Garage_Cars', 'Garage_Area', 'Garage_Cond', 'Paved_Drive', 'Wood_Deck_SF', 'Open_Porch_SF', 'Enclosed_Porch', 'Three_season_porch', 'Screen_Porch', 'Pool_Area', 'Pool_QC', 'Fence', 'Misc_Feature', 'Misc_Val', 'Mo_Sold', 'Year_Sold', 'Sale_Type', 'Sale_Condition', 'Sale_Price', 'Longitude', 'Latitude']

passthrough

ct.transform(ames) boxcox__Lot_Area ... remainder__Latitude

0 21.839 ... 42.054

1 18.229 ... 42.053

2 18.928 ... 42.053

3 18.093 ... 42.051

4 18.821 ... 42.061

... ... ... ...

2925 16.979 ... 41.989

2926 17.343 ... 41.988

2927 17.872 ... 41.987

2928 17.733 ... 41.991

2929 17.604 ... 41.989

[2930 rows x 74 columns]